Differentially Private GAN

Introduction

- Propose a differentially private generative adversarial network

- Uses the Wasserstein distance which is better than JS-divergence, i.e. WGAN

Framework

- GAN : minimax game between generator and discriminator.

- Based on the Wasserstein GAN (Arjovsky et al., 2017) framework

How to achieve differential privacy during the learning algorithm?

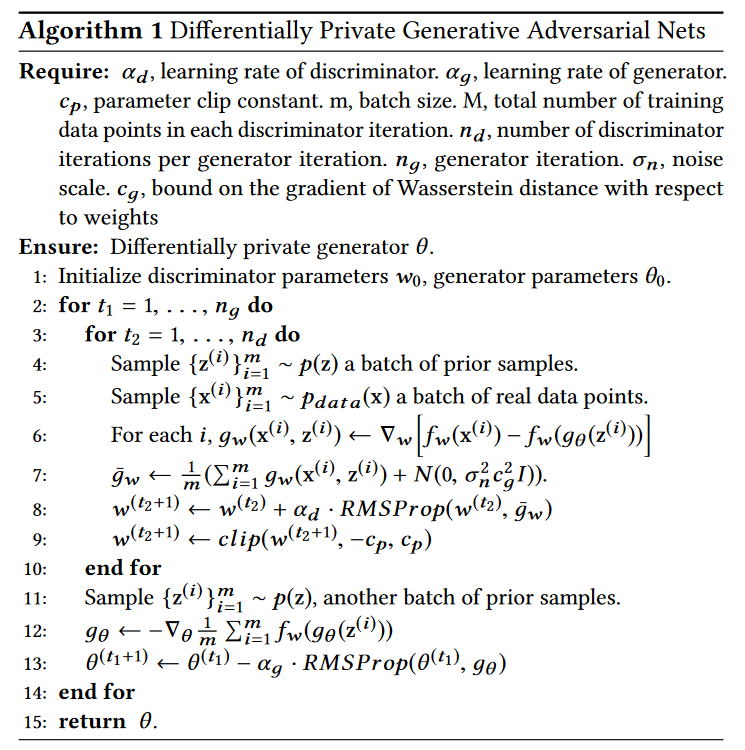

Algorithm

DPGAN Algorithm

DPGAN Algorithm

- At the 7th line of the code, Gaussian noise is added to the gradient of the Wasserstein distances.

- Line 9 : Weight Clipping (How about the gradient penalty term?)

Privacy Guaranty

- $\theta$ : Parameters of generator, defined through the discrimator parameters $w$

- $\epsilon$ : Privacy budget (smaller budget guarantees higher level of privacy)

Consider the update procedure at the algorithm above, for a fixed $t_{2}$.

\[\begin{align} &\mathbf{z}\sim p(z) : \text{a batch of prior sampes}\\ &\mathbf{x}\sim p^{\ast}(x) : \text{a batch of real data points}\\ & g_{w}(x_{i},z_{i}) \leftarrow \nabla_{w} \left(f_{w}(x_{i})-f_{w}(g_\theta(z_{i})\right)\\ &\bar g_{w}=\frac{1}{m}\sum_{i=1}^{m}g_{w}(x_{i},z_{i})+\mathcal{N}(\mathbf{0},\sigma_{n}^{2}c_{g}^{2}\mathbf{I})\\ &w^{(t_{2}+1)} = w^{(t_{2})} + \text{Gradient Descent}\\ &w^{(t_{2}+1)} =\text{clip}(w^{(t_{2}+1)}, -c_{p},c_{p}) \end{align}\]Since the procedure above produces a new output $w^{(t_{2}+1)}$ with the dataset $D$ and an auxiliary input $w^{(t_{2})}$ and noise structure, it can be regarded as an algorithm, and write as

\[\mathcal{A}_{p}(D)=M(\text{aux},D)\]Thus, we can define the privacy loss for $M$ as follows.

Definition

The privacy loss at $o$ is defined as

\[c(o;M,\text{aux},D,D') := \log \frac{\Pr(M(\text{aux},D)=o)}{\Pr(M(\text{aux},D')=o)}\]where $D,D’$ are the neighboring datasets. Also, we can define the random variable $C$ of privacy loss as follows.

\[C(M,\text{aux},D,D') := c(M(D);M,\text{aux},D,D')\]Log moment generating function

\[\alpha_{M}(\lambda;\text{aux},D,D') := \log \mathrm{E}_{o\sim M(\text{aux},D)}\left[\exp \left(\lambda C(M,\text{aux},D,D')\right)\right]\]Moments accountant

\[\alpha_{M}(\lambda) := \max_{\text{aux},D,D'}\alpha_{M}(\lambda;\text{aux},D,D')\]See (Abadi et al., 2016).

Lemma

Under the condition of Algorithm 1, assume that the activation function of the discriminator has a bounded range and bounded derivatives everywhere: $\sigma(\cdot)\le B_{\sigma}, \sigma’(\cdot)\le B_{\sigma’}$, and input space is compact so that $\Vert \mathbf{x}\Vert\leq B_{x}$. Then, $\left\Vert g_{w}(\mathbf{x}^{(i)},\mathbf{z}^{(i)})\right\Vert \le c_{g}$ for some constant $c_{g}$.

Remark. ReLU, Softplus activation functions are unbounded, but guarantees the boundness since the compactness of input space affects on the compactness of the function values.

Lemma 2

Given the sampling probability $q= \frac{m}{M}$, the number of discriminator iterations in each inner loop $n_{d}$ and privacy violation $\delta$, for arbitrary $\epsilon>0$ the parameters of discriminator guarantee $(\epsilon,\delta)$-privacy w.r.t. all the data points used in that outer loop if we choose:

\[\sigma_{n}=\frac{2q}{\epsilon} \sqrt{n_{d}\log(\frac{1}{\delta})}\]Proof. See paper

Theorem

The Algorithm 1 learns a generator which guarantees $(\epsilon,\delta)$-DP.

References

- Xie, L., Lin, K., Wang, S., Wang, F., & Zhou, J. (2018). Differentially Private Generative Adversarial Network (arXiv:1802.06739). arXiv. https://doi.org/10.48550/arXiv.1802.06739

Leave a comment