A Neural Approach to Spatiotemporal data release with Differential Privacy

Intro

- Most existing work on DP-publication of location data focuses on single snapshot releases (Cormode et al., 2012)

- To release multiple snapshot, user-level privacy is required

- Two key aspects : Bound sensitivity and Add noise, with denoising step

Preliminaries

Problem Formulation

Goal

Release high-resolution density information of dataset $D$.

- Build a $M\times M\times T$ histogram $H$ over $D$, where $M,T$ is determined spatial and temporal resolution parameter.

Queries

- Range count queries

- Given the query range(max,min of lat, lon, time)

- Return : the number of user location in $D$ that satisfy this range

- Metric : relative error metric $\text{RE}(y,u) = \dfrac{\vert y-u\vert }{\max\lbrace u,\psi\rbrace }$

- where $\psi$ is smoothing factor to avoid division by zero.

- Nearest hotspot queries

- Given query location $q$, density threshold $v$, spatio-temporal extent $SR$

- Return : closest cell to $q$ within extents $SR$ that contains at least $v$ locations.

- Metric : MAE between distance $H,\hat H$ for distance penalty. Regret (deviation of the reported density of the found hotspot from given $\hat H, v$) for hotspot density estimation error

- $\text{Regret}(q)=0$ if the reported hotspot meets the density threshold $v$.

- Forecasting queries

- Given timeseries of spatial densities and forecasting horizon $h$

- Return : The prediction of count of location reports for $h$ future timesteps.

- Metric : symmetric mean absolute percentage errors

where $A_{t}$ are the true counts from $H$ in the $h$ timesteps and $F_{t}$ are the $h$ predicted counts from a forecasting algorithm fitted to the historical data from $\hat H$.

Data

- Spatiotemporal data : have density patterns through both temporal and spatial axis. (e.g. weekday vs weekend pattern at commercial area) (Yang et al., n.d.)

- Distribution across users : power law distribution

VDRVAE-based Density Release

- Differentially private release of spatiotemporal data

Data collection

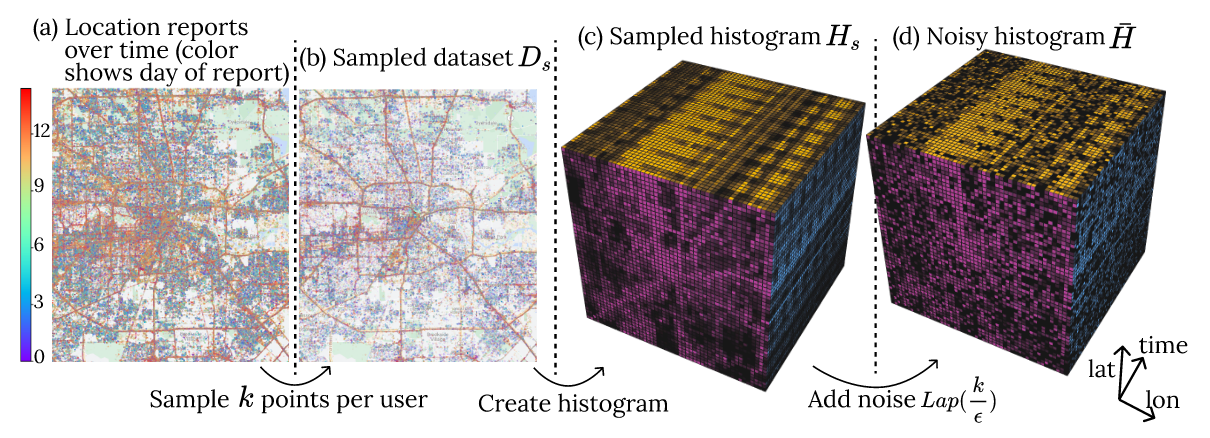

- Goal is to create the $\epsilon$-DP histogram (Figure (d)).

- Laplace mechanism with sensitivity $\Delta=k_\text{max}$ i.e. the maximum number of points.

- Bound sensitivity by sampling a maximum of $k$ points per user.

Learned Denoising

- Spatiotemporal dataset are similar to video(seq. of images)

- Regularized representation learning > learns denoised representation without overfitting

- Multi-resolution learning can capture spatio-temporal patterns at different resolution

Design Principles

-

- Denoising with regularized representation learning

-

Derive a denoised histogram $\hat H$ from VAE that minimizes reconstruction loss $\Vert \hat H - \bar H\Vert$ . By setting the dimensionality of representation lower than $\bar H$, the representation will capture the patterns in $\bar H$.

-

- Multi-resolution Learning

-

Prepare the training set with $r$ different resolution scales. Expect to improve denoising accuracy via MRL.

Algorithm

\[\begin{align} &\textbf{Algorithm } \text{Learned Denoising}\\ &\textbf{Input: } \text{Set of noisy 2D histograms, } \bar H\\ &\textbf{Output: } \text{Set of denoised 2D histograms, } \hat H \\ &\quad \bar T \leftarrow \bar H\\ &\quad \textbf{For } j\leftarrow 2 \text{ to } r \textbf{ do:} \\ &\quad \quad \textbf{For } \bar H_{i}\text{ in } \bar H \textbf{ do:}\\ &\quad \quad \quad \bar H_{i}^{j} \leftarrow \text{Histogram from aggregating } j\times j \text{ blocks of } \bar H_{i} \\ &\quad \quad \quad \bar T \leftarrow \bar T \cup \bar H_{i}^{j}\\ &\quad \text{Initialize encoder, decoder}\\ &\quad \textbf{While } \text{convergence} \textbf{ do:}\\ &\quad \quad z,\hat T \leftarrow \text{encoder}(\bar T:\theta_{e}),\text{decoder}(z:\theta_{d})\\ &\quad \quad L_{C}(\hat T) \leftarrow \sum_{i}\Vert \bar T_{i}-\hat T_{i}\Vert^{2}\\ &\quad \quad L_{G}(z) \leftarrow d(z,\Gamma)\\ &\quad \quad L \leftarrow \alpha L_{G}+L_{C}\\ &\quad \quad \theta \leftarrow \theta_{e}\cup \theta_{d}\\ &\quad \quad \text{Update }\theta \text{ in direction } -\nabla_{\theta}L \end{align}\]Model

- Given a noisy ST histogram $\bar H= \lbrace \bar H_i\rbrace _{i=1}^{T}$ where $\bar H_i$ is a spatial histogram at $i$-th timestamp.

- For regularized representation learning, use Convolutional VAE.

- $\text{encoder}(.;\theta_{e})$ : 2D histogram (any resolution) > representation $\in \mathbb{R}^{n\times l}$

- $\text{decoder}(.;\theta_{d})$ : representation > 2D histogram

- Inference : Given $\bar H_i$, obtain $\hat H$ (3D histogram).

VQ-VAE (Razavi et al., 2019)

- $\Gamma=\lbrace e_{1},\ldots,e_{B}\rbrace $ : codebook of $B$ different discrete encodings

- For training set $\bar T$, $\text{encoder}(\bar T:\theta_{e})$ produces a set of representations $z$.

- $L_{G}(z) = d(z,\Gamma)$ : regulariaztion loss. distance between representation and codebook

- $L_{C}(\hat T) = \sum_{i=1}^{\vert \bar T\vert }\Vert \bar T_{i}-\hat T_{i}\Vert^{2}$ : reconstruction loss

- Optimization becomes:

- Parameters

- $B$ : variability of the encoding space (representation power)

- $\alpha$ : How much the encoder is forced to adhere to the codebook.

- Experiment : $\alpha=1, \beta=128$

Statistical Analysis

Let $X_{i}^{c}$ be the Bernoulli random variable indicates whether $i$th point falls in a cell $c$. Suppose the $i$th point is sampled from $\text{Uniform}(D)$. Let $\mathrm{E}X_{i}^{c}=\mu_{c}$. The estimator is given by

\[\theta_{c}=\gamma \left(\sum_{i}X_{i}^{c}+\text{Laplace}\left( \frac{k}{\epsilon}\right)\right).\]Then, the bias and variance can be calculated as follows.

\[\begin{align} \text{Bias}(\theta_{c}) &= \mathrm{E}[\theta_{c}-N\mu_{c}] =\mu_{c}(\gamma n-N)\\ \mathrm{Var}\left(\theta_{c}\right)&= \gamma^{2}\left(n\mu_{c}(1-\mu_{c}+2k^{2}\epsilon^{-2}\right) \end{align}\]where $N=\vert D\vert $ is the total number of data points and $n=\vert D_{s}\vert $ is the total number of sampled data. Thus, the MSE is given by

\[\text{MSE}(\theta_{c}) = \gamma^{2}\left(n\mu_{c}(1-\mu_{c})+2k^{2}\epsilon^{-2}\right) + \mu_{c}^{2}(\gamma n-N)^{2}.\]By differentiating w.r.t. $\gamma$, we obtain

\[\gamma = \dfrac{nNC}{2mk^{2}\epsilon^{-2}+(1-C)n+Cn^{2}}\]minimizes $\sum_{c}\text{MSE}(\theta_{c})$ where $C=\sum_{c=1}^{m}\mu_{c}^{2}$ is a data-dependent constant.

Theorem

\[\begin{align} &\textbf{Algorithm } \text{VDR}\\ &\textbf{Input: } \text{Dataset } D, \epsilon, \text{ discretization resolution parameter } M,T, k,C\\ &\textbf{Output: } \text{Privatized 3D histogram } \hat H \text{ of } D\\ &\quad D_{s} \leftarrow \text{sample } k \text{ points per user in } D\\ &\quad H_{s} \leftarrow M\times M\times T \text{ histogram of } D_{s}\\ &\quad \bar H = H_{s} + \text{Laplace}\left( \frac{k}{\epsilon}\right)\\ &\quad \hat H \leftarrow \text{Denoise } \bar H \text{ via VAE}\\ &\textbf{Return } \gamma C\times \hat H \end{align}\]The following VDR algorithm is $\epsilon$-DP.

References

- Ahuja, R., Zeighami, S., Ghinita, G., & Shahabi, C. (2023). A Neural Approach to Spatio-Temporal Data Release with User-Level Differential Privacy. Proceedings of the ACM on Management of Data, 1(1), 1–25. https://doi.org/10.1145/3588701

- Razavi, A., Oord, A. van den, & Vinyals, O. (2019). Generating Diverse High-Fidelity Images with VQ-VAE-2 (arXiv:1906.00446). arXiv. https://doi.org/10.48550/arXiv.1906.00446

- Cormode, G., Procopiuc, C., Srivastava, D., Shen, E., & Yu, T. (2012). Differentially Private Spatial Decompositions. 2012 IEEE 28th International Conference on Data Engineering, 20–31. https://doi.org/10.1109/ICDE.2012.16

Leave a comment